Planning For Success

Tue Jul 04 2023

Written By: Hack13

First off, I want to thank everyone for your ongoing support and for trusting us with your content. Moving platforms is scary and complicated, and it often comes with the worry of whether your data will be around for the long term. Thank you all for trusting us; I hope we can continue to strengthen that trust.

Over this past weekend, we saw yet another mass exodus from Twitter, and this time more people are taking it seriously and giving the Fediverse a proper chance. The influx put massive loads and traffic spikes on many instances, overwhelming some, while many others, ourselves included, were just able to keep up but noticed the surge of chatter across the wider Fediverse. Because we are all interconnected and federate with many instances, even those of us not directly under heavy load indirectly ingest all the content from the instances we federate with. We handled the load fine, but it raised some concerns about our growth: we don't expect all of this new activity to stay this high, but we do expect a real, lasting increase this time.

Stepping Up Our Game

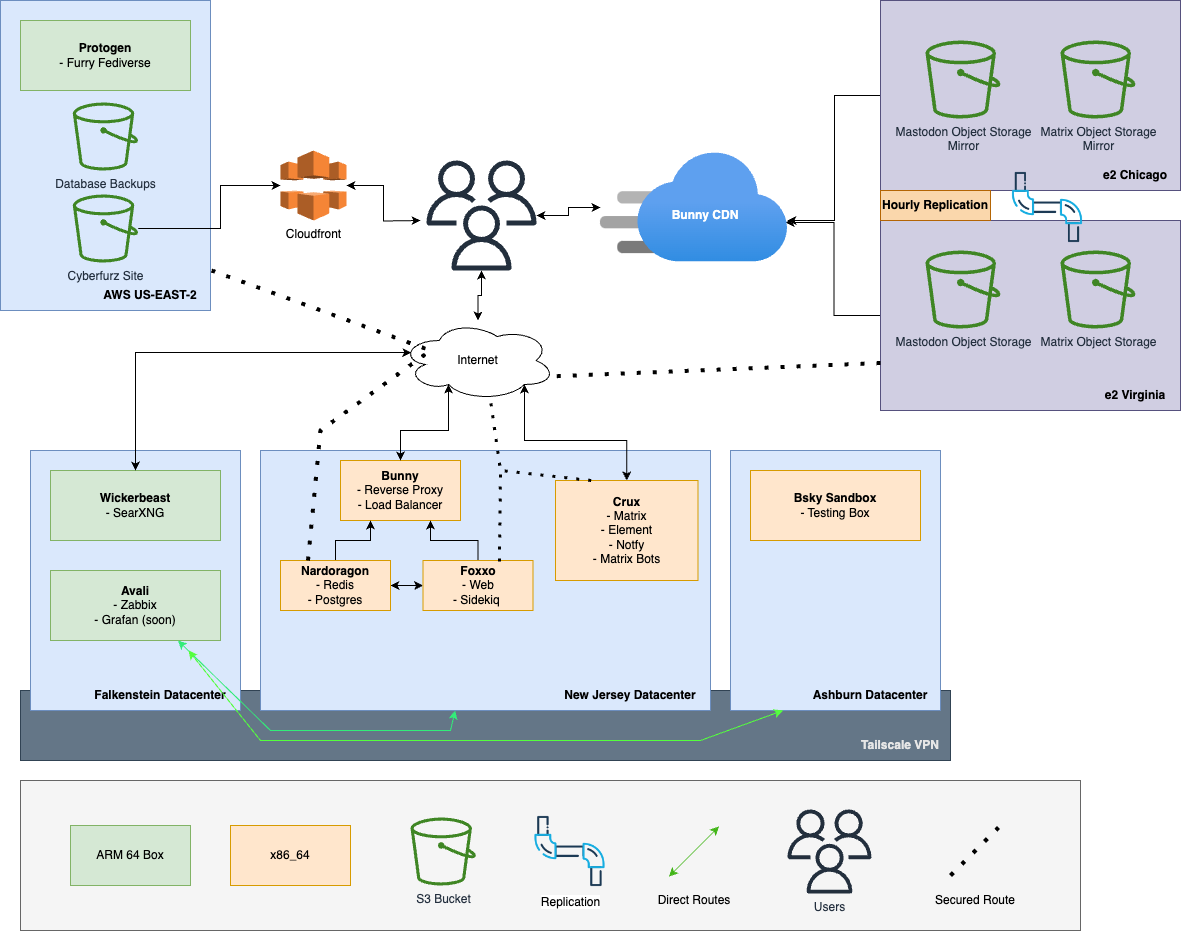

We already take several extra steps to ensure we have redundancy and can survive unavoidable outages across our infrastructure. We set up bucket replication early last week after iDrive e2's Virginia data center, where our media is housed, had an outage. That outage temporarily left us without access to our backlog of images and unable to store any new media users uploaded. Since then, our storage has spanned their Virginia and Chicago locations, syncing every hour, so we don't endure this type of outage again.
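In practice, dedicated tools (rclone, for example) handle this kind of hourly bucket sync. The sketch below only illustrates the core idea of a one-way mirror, assuming each bucket can be listed as (key, ETag, size) records; `ObjectInfo` and `plan_sync` are hypothetical names, not part of any provider's API, and this is not the replication setup we actually run.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectInfo:
    """Hypothetical record for one object in an S3-compatible bucket listing."""
    key: str
    etag: str  # content fingerprint reported by the provider
    size: int  # bytes


def plan_sync(source: list[ObjectInfo], replica: list[ObjectInfo]) -> tuple[list[str], list[str]]:
    """Return (keys_to_copy, keys_to_delete) that would make `replica` mirror `source`.

    A key is copied when the replica lacks it or its ETag/size differ;
    a key is deleted when it exists only in the replica.
    """
    replica_index = {obj.key: obj for obj in replica}
    source_keys = {obj.key for obj in source}
    to_copy = []
    for obj in source:
        existing = replica_index.get(obj.key)
        if existing is None or (existing.etag, existing.size) != (obj.etag, obj.size):
            to_copy.append(obj.key)
    to_delete = sorted(key for key in replica_index if key not in source_keys)
    return sorted(to_copy), to_delete
```

The listings would come from each provider's S3 `ListObjectsV2` endpoint, and a scheduler such as cron could run the copy step hourly. For a pure backup copy you might skip the deletions entirely. ETags of multipart uploads can differ between buckets even for identical content, which here only causes an unnecessary re-copy rather than data loss.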

This weekend, Cyberfurz Social was still running on a single box, and the extra data we were churning through on the back end started to impact CPU utilization. We kept up, but it occasionally caused minor slowdowns on the API and web interface. With that in mind, we decided to expand our infrastructure, without going overboard and while staying within our budget.

We have now split Cyberfurz Social across three systems that we have happily named Bunny, Nardoragon, and Foxxo. Each handles a different part of the Mastodon infrastructure. Bunny is our reverse proxy and load balancer, splitting traffic for the web interface and API between Nardoragon and Foxxo. Nardoragon was the original box running everything; it has now been repurposed to house the PostgreSQL and Redis databases, and it also serves as a secondary web and API instance when the load on Foxxo is excessive. Foxxo, another new addition to our infrastructure, focuses on running the Sidekiq queues, the part of Mastodon that handles federation and syncs your posts with the rest of the Fediverse.
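Per the description above, Foxxo takes web and API traffic first and Nardoragon only picks it up when Foxxo is overloaded. A minimal sketch of that spill-over decision follows. Only the host names come from the post; the `Upstream` type, the request counts, and the `max_requests` threshold are hypothetical, and this is not the configuration Bunny actually runs.

```python
from dataclasses import dataclass


@dataclass
class Upstream:
    """Hypothetical view of one web/API backend as the load balancer sees it."""
    name: str
    active_requests: int
    max_requests: int  # hypothetical point at which the backend counts as overloaded

    @property
    def headroom(self) -> int:
        return self.max_requests - self.active_requests


def pick_upstream(primary: Upstream, backup: Upstream) -> str:
    """Prefer the primary; spill over to the backup only when the primary is full."""
    if primary.headroom > 0:
        return primary.name
    if backup.headroom > 0:
        return backup.name
    # Both saturated: send the request wherever the overload is smaller.
    return primary.name if primary.headroom >= backup.headroom else backup.name


foxxo = Upstream("foxxo", active_requests=10, max_requests=100)
nardoragon = Upstream("nardoragon", active_requests=0, max_requests=50)
print(pick_upstream(foxxo, nardoragon))  # foxxo
```

Off-the-shelf proxies offer similar behaviour. nginx, for instance, has a `backup` flag for upstream servers, though it sends traffic to the backup only when the primary servers are unavailable rather than merely busy.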

Attached below is an image of our updated infrastructure.

Keeping Things Affordable

We take advantage of several deals to keep our costs low, such as paying for servers yearly rather than monthly. We also use market underdogs that undercut giants like AWS on services such as S3. One of them is iDrive, whose S3-compatible service, e2, offers significant discounts when you pre-buy storage in bulk. That is not to say we don't still use AWS: the static site you are reading this blog post on is served from an S3 bucket through their CloudFront CDN.

We also rely on smaller businesses that have been around a long time: our servers in New Jersey come from RackNerd LLC, and the rest are Hetzner Cloud servers. This keeps us from being locked into a single vendor and spreads our business across multiple providers. Because our systems are spread across several providers, we secure all communication between them with a WireGuard-based VPN powered by Tailscale.
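Tailscale gives each node an IPv4 address from the 100.64.0.0/10 range by default, so one simple sanity check is to confirm that every backend connection (database, Redis, and so on) points at a tailnet address rather than a public IP. The backend names and addresses below are hypothetical, and `off_tailnet` is an illustrative helper, not a Tailscale tool.

```python
import ipaddress

# Tailscale assigns IPv4 node addresses from the 100.64.0.0/10 (CGNAT) range by default.
TAILNET_V4 = ipaddress.ip_network("100.64.0.0/10")


def off_tailnet(backends: dict[str, str]) -> list[str]:
    """Return the names of backends whose address is outside the tailnet range.

    Anything returned here would talk over the public internet instead of the
    WireGuard tunnel. IPv6 addresses are also reported, since only the IPv4
    tailnet range is checked.
    """
    return sorted(
        name
        for name, address in backends.items()
        if ipaddress.ip_address(address) not in TAILNET_V4
    )


# Hypothetical backend map: the Redis entry accidentally uses a public address.
print(off_tailnet({"postgres": "100.101.102.103", "redis": "203.0.113.7"}))  # ['redis']
```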

We also wouldn't be able to keep up with all the media you upload from all over the world, from short-form videos to images and more, without a proper CDN. For that, we are happy customers of Bunny CDN (not to be confused with our proxy server, Bunny), whose very reasonable pricing ensures that if you are viewing a video or image from our server, it should load nice and snappy wherever in the world you are. We pay a little more than we used to since upgrading from their volume tier to their edge tier a few months ago.

Lastly, backups. Every night we dump the database to a .sql.gz file and store it on Amazon S3. We also take incremental server backups with Borg Backup through BorgBase; these are fully encrypted locally, transferred over an encrypted connection to BorgBase's datacenters, and stored there with a key only we hold, so BorgBase cannot decrypt them.
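A nightly dump like this can be as simple as streaming `pg_dump` output through gzip. The sketch below assumes the dump command is passed in (for example `["pg_dump", "--dbname", "mastodon_production"]`, where the database name is an assumption), writes a dated file under a hypothetical naming scheme, and leaves the upload to S3 (for example with `aws s3 cp`) out of scope. `nightly_dump` is an illustrative helper, not our actual backup script.

```python
import gzip
import shutil
import subprocess
from datetime import date
from pathlib import Path
from typing import Optional


def nightly_dump(command: list[str], out_dir: Path, today: Optional[date] = None) -> Path:
    """Run `command` (e.g. pg_dump) and stream its stdout into a dated .sql.gz file."""
    today = today or date.today()
    # Hypothetical naming scheme: one file per day, e.g. db-2023-07-04.sql.gz
    out_path = out_dir / f"db-{today.isoformat()}.sql.gz"
    with subprocess.Popen(command, stdout=subprocess.PIPE) as proc:
        with gzip.open(out_path, "wb") as gz:
            # Copy in chunks so a large dump never has to fit in memory.
            shutil.copyfileobj(proc.stdout, gz)
    # Leaving the Popen block waits for the process, so returncode is set here.
    if proc.returncode != 0:
        out_path.unlink(missing_ok=True)  # don't keep a truncated dump around
        raise RuntimeError(f"dump command exited with status {proc.returncode}")
    return out_path
```

Piping plain SQL through gzip keeps the dump readable with standard tools; `pg_dump`'s own custom format is an alternative if you prefer restoring with `pg_restore`.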

This infrastructure currently costs us just shy of US$500 per year, not including the time we spend patching and maintaining it.

Author Profile

Hack13

Pronouns: they/them

Short Bio:

A fox that enjoys web development and programming. They also enjoy playing in VR, as well as playing around with new tech.